26

2023

Exposure Values are a somewhat important concept, kind of, if you are trying to maintain consistent exposure while fiddling with your camera in manual mode. Realistically, by shooting in aperture or shutter priority, with your ISO locked down, your camera will maintain the proper exposure value for you anyway.

A photographer might need to prepare for any values between -6 and 16, and the difference of 1 exposure value is called a “stop”. Remember, though “stopping down” generally refers to using a narrower aperture, you can also shorten your exposure time or lower your ISO by one stop!

The actual math of this does not matter too much to a normal photographer. Or an abnormal photographer. It’s somewhat fascinating to think about this if you are designing experiments or writing software that does crazy camera things though.

Honestly, if you’re doing crazy math-based camera experiments, you probably didn’t need to read this. However, I’m upset that the equations for the exposure triangle (which dictate the relationship between iso/shutter speed/f-number) are not readily available without you solving for these elements yourself, so I figured I’d commit them to one place on the internet. If you need these, look no further.

Note many resources will give versions of these equations using EV_100, or Exposure Value at ISO100. No standards body has codified use of EV_100, and while some websites claim that “Light Value” refers to non-ISO100 EV (and sometimes the opposite, EV_100), the definitions around the internet contradict one another. Forget about light value for now. It’s not a useful term; it’s the math photography equivalent of “nonplussed”. Just use these equations, and if you want ISO100 or EV_100, plug in 100 for the ISO.

If these all feel like they are yielding answers that are just a littttle off- welp, congratulations, you’ve learned the dirty secret of photography. The marked f-numbers are very, very slightly rounded (f/5.6, for example, is really closer to 5.657). The equations below are mathematically sound, where:

- F is the relative aperture (f-number)

- t is the exposure time (“shutter speed”) in seconds

- S is the ISO arithmetic speed

- EV is the exposure value

ISO

$$S = \frac{100 \times F^2}{t \times 2^{EV}}$$

iso = (100 * (fnumber ** 2)) / (shutter_speed * (2 ** exposure_value))

APERTURE

$$F = \sqrt{\frac{S \times t \times 2^{EV}}{100}}$$

import math

fnumber = math.sqrt(((iso * shutter_speed) * (2 ** exposure_value)) / 100)

SHUTTER SPEED

$$t = \frac{100 \times F^2}{S \times 2^{EV}}$$

shutter_speed = (100 * (fnumber ** 2)) / (iso * (2 ** exposure_value))

EXPOSURE VALUE

$$EV = \log_2\left(\frac{100 \times F^2}{S \times t}\right)$$

import math

exposure_value = math.log2((100 * (fnumber ** 2)) / (iso * shutter_speed))

There!
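If you’d rather have the four equations as something you can paste into a script, here is a minimal sketch that wraps them in functions and checks that they round-trip. The function names and the sample settings (f/4, 1/60 s, ISO 400) are my own illustrative assumptions, not values from any standard.

```python
import math

# The four rearrangements of the same relationship.
# fnumber = F, shutter_speed = t (seconds), iso = S, ev = EV.

def exposure_value(fnumber, shutter_speed, iso):
    return math.log2((100 * fnumber ** 2) / (iso * shutter_speed))

def solve_iso(fnumber, shutter_speed, ev):
    return (100 * fnumber ** 2) / (shutter_speed * 2 ** ev)

def solve_fnumber(iso, shutter_speed, ev):
    return math.sqrt((iso * shutter_speed * 2 ** ev) / 100)

def solve_shutter_speed(fnumber, iso, ev):
    return (100 * fnumber ** 2) / (iso * 2 ** ev)

# Hypothetical starting point: f/4, 1/60 s, ISO 400.
ev = exposure_value(fnumber=4.0, shutter_speed=1 / 60, iso=400)

# Solving for any one setting from the other two plus EV
# should hand back the value we started with.
recovered_iso = solve_iso(4.0, 1 / 60, ev)
recovered_fnumber = solve_fnumber(400, 1 / 60, ev)
recovered_shutter = solve_shutter_speed(4.0, 400, ev)

# The "slightly rounded" f-numbers: full stops are powers of sqrt(2),
# so the lens marked f/5.6 is really sqrt(2) ** 5.
exact_f56 = math.sqrt(2) ** 5
```

Feeding the marked 5.6 into these functions instead of the exact value is one reason the answers can land a hair off a whole stop.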

If these are useful for even one photography nerd blossoming into an engineer nerd, then this was completely worth it!

18

2019

While I was waiting for burlesque bunny models to prepare for an upcoming shoot, a Danish traveler wandered into our set. She was goodly enough to pose so I could try out my brand new lens, which I threw on the nearest camera, my modified infrared Nikon. As has been demonstrated in past explorations, this is not the sharpest sensor, as there is no guarantee that a critical focus plane will even exist if your aperture is too open.

To my surprise, the new Daguerreotype Achromat lens, a recreation of the Chevalier design from 1839, worked perfectly with this modification. Apparently simplistic configurations are sharp as a tack in infrared, even while wide open!

As she was captured, straight out of camera:

Of course, this is barely even a photograph. Let’s adjust this, and make it black and white:

Great!

But what if we want color?

As we all know, infrared pictures look really weird in traditional “false color”, which is how modern digital photographers often present their infrared work. Obviously, it’s impossible to present an invisible spectrum without modifying it to become visible, so SOME color has to be given to it. Further articles on traditional false coloring will come, but a cursory Googling will provide a litany of tutorials.

As an example, let’s look at the lovely Madeleine in this original infrared photo, after it’s been white balanced to pull the highlights into a blue range. She looks weird. And she’s not a weird looking girl:

We swap the Red and Blue channel, as is the standard jumping off point. Ummm… Great? Now she looks weird AND sickly. We have done no good here.
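For the curious, the red/blue swap itself is about as simple as image math gets. Here is a minimal sketch in plain Python, assuming the image is just a list of (r, g, b) tuples; the function name and the two sample pixels are made up for illustration, and a real editor does the same thing with its channel mixer.

```python
def swap_red_blue(pixels):
    """Return a new list of (r, g, b) tuples with red and blue exchanged."""
    return [(b, g, r) for (r, g, b) in pixels]

# Two hypothetical pixels: a warm highlight and a dark bluish shadow.
row = [(200, 120, 40), (10, 20, 30)]
swapped = swap_red_blue(row)
```

Green is left alone, and swapping twice gets you back where you started, which is why this is only a jumping-off point and not a finished look.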

But perhaps there is another way? I’ve been poking at machine learning for some separate projects, so maybe we can use an artificial intelligence to fill in some gaps for us.

I’ve been interested in the work of Richard Zhang and the work he did with Phillip Isola and Alexei A. Efros on using artificial intelligence to colorize things. It seems a solid use of “Deep Learning” to train an AI on a large set of images, then have it color an image for you. You can read more about it here, if you want! http://richzhang.github.io/colorization/

Let’s pop the first black and white image from above into Zhang, Isola, and Efros’ automatic artificial-intelligence colorizer, a demo of which he provides freely at https://demos.algorithmia.com/colorize-photos/

Interesting. Kind of.

Ultimately we see that AI recognizes that there is, in fact, a girl, and the AI’s model (think of this as the memories the AI has retained from past studies) recognizes foliage pretty well.

The only problem is, I’m not interested in foliage turning green, or the model’s eyes turning brown. I don’t much care for the colors bleeding out of the edges, either. I came to make a candy-colored infrared nightmare, and make a candy-colored infrared nightmare I shall!

Instead, I did more reading, and found this paper, a further exploration that involved Zhang, Isola and Efros. https://richzhang.github.io/ideepcolor/ By “steering” an AI, pointing to where certain colors should be, perhaps we can make a more interesting picture?

I followed some simple directions to run this code on a Mac, and within a few minutes of getting the program started, I had colorized a version I liked! No training or reading instructions was necessary. It was largely very intuitive.

To use iDeepColor, simply mouse-over a spot on the image on the left, click, wait a second, and see what the AI recommends. Chances are pretty good many of the points are going to already be a reasonable value, so simply click a point of color you like, and when the palette is recommended, do nothing. Click to a point where you’d like to change the color, then choose a color in the a b color gamut. I believe this to be a* b* channels, from the 1976 CIE L*a*b* space, but we’ll explore this later. You may have to get finicky, adding a few points around an area you’ve just defined, to keep the color from bleeding out.

Honestly, despite the frustration of not having a “zoom” ability, or an “undo” function, this was so intuitive there seems to be little left for me to explain.

After about 20 minutes futzing about, I was left with this:

Is it perfect? No. Is it novel? Absolutely! In fact, it gives me some ideas for colorizing more competently in Photoshop for future endeavors.

I hope this inspires some creative steps with colorization and AI in hyperspectral photography!

09

2019

After reading my post on how to recreate the look of tintype and wet-plate collodion digitally, you may have been left wanting the code to appropriately demosaic/debayer.

This is a photography blog, not a coding blog, so please bear with me for my first go at making this accessible.

To recap from my earlier posts:

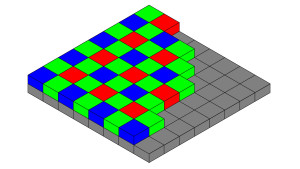

In looking at an image captured through a BG25 glass, you will only capture 1 out of every 4 pixels correctly, thanks to the Bayer color filter array (CFA) on top of your digital camera’s sensor. That means 3 pixels are “dead” or, at least “incorrect”. Here’s a quick script to throw them out.

I know I will get some feedback that this is not a debayer, or demosaic, in any traditional sense. You are technically right, haters. This is simply a downsample to strip out the three dead pixels.

You will quarter the resolution of your image with this process. I’m sorry, but it has to happen. The resulting clarity will play nicely with any upscaling processes you then wish to engage upon, however, and, especially in portraiture, artificial intelligence can work wonders.

I originally wrote this in MATLAB, but instead let me give it to you with freeware.

Software Requirements

You will need the following command-line tools. These are free, but if you aren’t comfortable using a command line interface (CLI) then I have bad news for you- this is unfortunately how you’re going to have to do it.

ImageMagick – This is THE tool for altering an image from the command line. It exists for all platforms, so whatever computer you are running, you can use it.

DCRaw – This is THE tool for opening raw files from a digital camera. RawTherapee is a front-end for this tool. Unfortunately, you still will have to install it, even if you have RawTherapee, to use the command-line interface.

I recommend, if you are on a Mac/Linux, simply using Homebrew to grab these- open up a terminal window, and type

brew install imagemagick

and then

brew install dcraw

On PC, this might be a bit more complex, but these tools exist for all platforms, which is why I chose to code in this fashion.

Assuming these are installed correctly, you should be able to run this single line of code to demosaic down to the blue sensor pixel.

The Code

Open a terminal window, navigate to where your RAW file lives, and type:

dcraw -c -D -T -6 -g 2.4 12.92 -o 1 YOURINPUTFILE.(NEF.RAW) | convert - -roll +1+1 -sample 50% YOUROUTPUT.TIF

This tells DCRaw:

(-c) Output in a fashion that can be handed off to ImageMagick (STDOUT for piping)

(-D) Monochromatic, untouched output

(-T) Tiff

(-6) 16 bit

(-g 2.4 12.92) gamma 2.4 with a toe-slope of 12.92, which is, more simply put, the appropriate setting for sRGB.

(-o 1) Set the colorspace to sRGB with a d65 white point. You can tinker with this, but ultimately it shouldn’t make too big of a difference, since we are compressing to a monochromatic pipeline here regardless.

Note that some tutorials will tell you to use a -4. This will output a linear electro-optical transfer function, and unless you really know what you’re doing, you probably don’t want this. I would argue you really don’t want this.

This then pipes ( | )the data to ImageMagick- or rather the utility it provides named “convert”:

(-sample 50%) Downsample at 2:1, which means you are dropping every other pixel in both X and Y directions

(-roll +1+1) Offset your image by the requisite number of pixels to place a blue pixel at the top left of the image. Assuming RGGB, this is +1+1. If you have BGGR, then this will be +0+0. I recommend trying them both. Heck, you can try +0+1 and +1+0 to see what would typically be the two green pixels. The one that looks the brightest is your correct image.

To test these I ran:

dcraw -c -D -T -6 -g 2.4 12.92 -o 1 DigitalCollodion_D610-1297copy.nef | convert - -roll +0+0 -sample 50% OutputTest_A.tif

dcraw -c -D -T -6 -g 2.4 12.92 -o 1 DigitalCollodion_D610-1297copy.nef | convert - -roll +0+1 -sample 50% OutputTest_B.tif

dcraw -c -D -T -6 -g 2.4 12.92 -o 1 DigitalCollodion_D610-1297copy.nef | convert - -roll +1+0 -sample 50% OutputTest_C.tif

dcraw -c -D -T -6 -g 2.4 12.92 -o 1 DigitalCollodion_D610-1297copy.nef | convert - -roll +1+1 -sample 50% OutputTest_D.tif

Not surprisingly, OutputTest_D matches the blue debayer pixel for my Nikon.
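If the four offsets feel like voodoo, here is a minimal sketch of the idea in plain Python: each offset keeps one site out of every 2x2 tile and throws the other three away. This only mirrors the concept on a toy grid. It is not how ImageMagick implements -roll or -sample internally, and the function names and pixel values are invented for illustration.

```python
def extract_site(mosaic, x_offset, y_offset):
    """Keep one pixel per 2x2 tile, starting at (x_offset, y_offset)."""
    return [row[x_offset::2] for row in mosaic[y_offset::2]]

def mean(plane):
    values = [v for row in plane for v in row]
    return sum(values) / len(values)

def brightest_offset(mosaic):
    """Try all four sites and return the (x, y) offset with the highest mean."""
    offsets = [(0, 0), (1, 0), (0, 1), (1, 1)]
    return max(offsets, key=lambda o: mean(extract_site(mosaic, o[0], o[1])))

# Toy 4x4 RGGB mosaic, labelled by which tile covers each pixel.
labels = [
    ["R", "G", "R", "G"],
    ["G", "B", "G", "B"],
    ["R", "G", "R", "G"],
    ["G", "B", "G", "B"],
]
blue_sites = extract_site(labels, 1, 1)

# Hypothetical raw values shot through blue glass: the blue sites are
# bright, green is dim, red is nearly black.
raw = [
    [20, 150, 20, 150],
    [150, 900, 150, 900],
    [20, 150, 20, 150],
    [150, 900, 150, 900],
]
best = brightest_offset(raw)
```

On an RGGB layout the blue site sits at offset (1, 1), which lines up with OutputTest_D being the keeper, and the output is half the width and half the height of the input.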

Note this is for a traditional Bayer filter. You might wish to review the description of color filter arrays, and see if there is a better fit for you and your sensor. I don’t have the foggiest of how this might be accomplished with an X-Trans color filter array with free command-line tools, unfortunately.

03

2019

Using Careful Science and Pretty Faces

While I was visiting San Francisco, Kristy Headley, a dear friend and fellow engineer, showed me her studio. There I was lucky enough to sit for her while she did some vintage tintyping. Tintyping was one of the earliest forms of photography, popular in the 1850s. It was a kind of incredibly inconvenient Polaroid- the photos were exposed instantly after a quick wash on plates of iron or aluminum (never actually tin). All you needed was a very, very large camera, plates treated with “collodion” to make a light-reactive surface, and a sizeable collection of chemicals. Unfortunately, the genuine process has a high risk of explosion, creating cyanide gas, incurring blindness from silver halide splashback, or getting whacked out on ether.

Tintype/ferrotype by Quinn Jacobson – CC BY-SA 3.0

Kristy showed me each painstaking step, and I was enamoured with the result. With her mastery of lighting techniques, she’d produced tintype photographs where every detail popped- every freckle, hair, and eyelash seemed to stand out. Yet, these looked very different from modern photography. There was a certain, seemingly unquantifiable trait to them. Why?

I set out to quantify this ol’ timey look, and the fashion by which one could reproduce it with a digital camera. Perhaps I could share in this delight without keeping jugs of volatile chemicals in my room.

Look to the Spectrum!

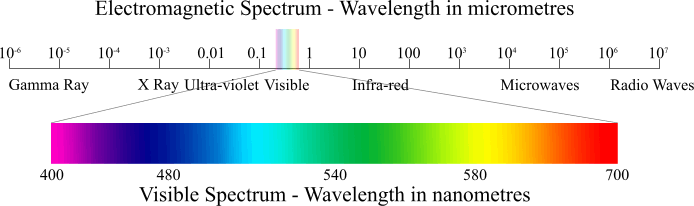

Kristy had told me that her exposure plates were only sensitive to certain colors: UV, blue, and some green. There was a specific range of wavelengths this collodion emulsion was sensitive to- one that doesn’t match human vision.

Human vision, as we can see above, is a sensitivity to the electromagnetic spectrum. Electromagnetic waves between about 400 and 700 nanometers will be perceived as light to us- the seven colors of the rainbow! (Interesting article about this to come later) If we dip to wavelengths shorter than 400, we get imperceivable ultraviolet (UV) light, and if we rise to wavelengths above 700, we get imperceivable infrared (IR) light.

But what do vintage photographic emulsion surfaces see?

Engineer Niles Lund seems to be the only person who has researched the spectral sensitivity of collodion, the emulsion chemical stew used to make wet-plate and tintype photographs in the mid-1800s. After exchanging a few emails, Niles sent me this updated spectral chart.

So now we know what to recreate if we want “digital collodion”! We need to cut out the upper half of the visible spectrum allowing nothing above about 520 nanometers into the camera.

I’ve done much work in this field, so I thought this would be a great time to blend science and art to explore this concept, and compare it to other hyper-spectral imagery!

Setting up an experiment

Now we invite some friends and models to come sit for us! I asked the models to avoid skin-concealing makeup and zinc-oxide sunscreen, which is the only sunscreen to obstruct UV-A rays. They were asked to model under a broad-spectrum light source (the sun), with an additional light source of a silver reflector, and maintain a pose for a few minutes while I photograph each under the following conditions:

Visible Spectrum

This is what humans see (obviously). Using three cones, sensitive to three different ranges of wavelengths, which loosely map to red-green-blue, we see everything from violet to red. Violet is the shortest wavelength. Red is the longest. Not surprisingly, this is what digital cameras see, as well. Silicon photovoltaic sensors, whether CCD or CMOS, can take in a theoretical 290nm to 1100nm. However, it would be maddening to have your photography overexposed, blooming, and flaring due to lightwaves you cannot see, to say nothing of threats of overheating, so sensors are limited by optical filters placed directly over the sensor to only photograph, more-or-less, in the human visible spectrum. Photons that don’t play nice in this range are either bounced back out of the lens, or absorbed and destroyed.

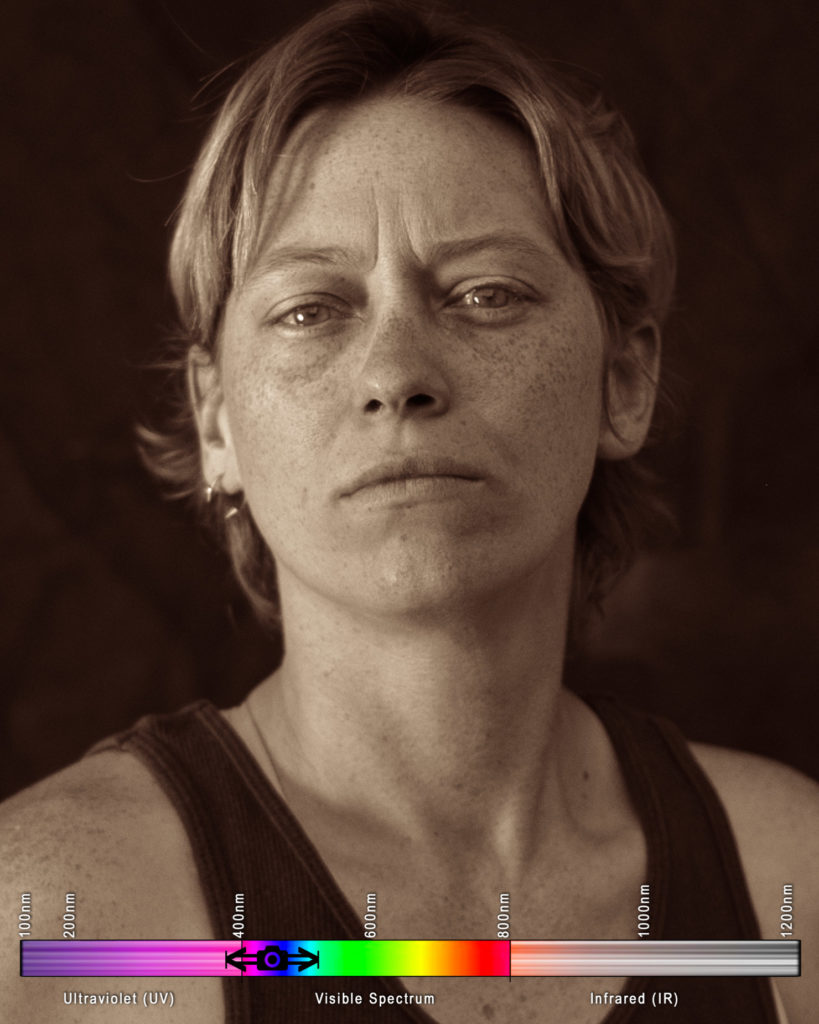

Here’s what our model Kayla looked like in the visible spectrum:

Notice her light freckles, dark shirt, and slightly light eyes

Orthochromatic Blue (Collodion AND Squid Vision)

This could be seen as the same spectrum that octopus and squid might view in. Humans, except for rare and awesome mutants and the colorblind, are “trichromatic”, with three cones that allow for three different ranges of wavelengths in. Cephalopods only have one- the S cone, which is sensitive in almost the exact fashion of the spectral curve of the 1850’s collodion (except for UV). Though they might have a different mechanical use of their eyes, which could allow for more color vision, but I digress…

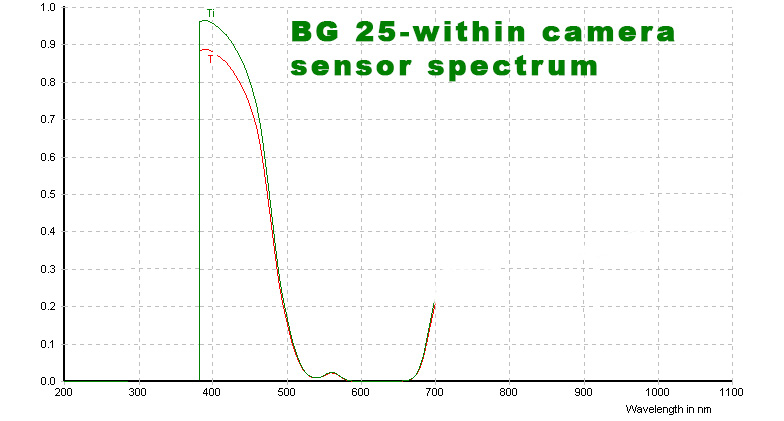

By using Schott BG25 bandpass glass, bought off a gentleman who fits esoteric German glass into filters, on a lens that cuts UV (any normal lens) on any unmodified camera, we get a theoretical spectral sensitivity that looks like:

Of course, all the photons that this can transmit that the camera can’t capture become irrelevant, so on an unmodified camera, we will get a spectral response that looks like:

This means that on an unmodified camera, using a lens that doesn’t block UV, we’re going to get an image where the focus is going to be more on near-UV damage to the skin- concentrations of freckles and blemishes are going to be much more pronounced. There’s going to be a light leak of very red photons, and we’ll be missing much of the UV range, but we’re very close to vintage emulsion now.

Our model, under the same lighting conditions (with an exposure compensation of +.7, calibrated from an 18% gray card):

Notice her pronounced freckles, dark shirt, and very light eyes

By gum, we’ve done it! With that nanty narking, I think we can say we’ve captured the ol’ timey feel of collodion!

…but it is not scientifically accurate. This is about the best we can do on an UNMODIFIED camera, as we need some expensive modifications to allow UV to extend into the sensor. More on that later.

Let’s see what she looks like in some other, more bizarre wavelengths!

Near Infrared and Short Wave Infrared (Snake Vision)

This is, likely, what snakes see. This is the orange-and-red human-visible spectrum (near infrared, or NIR) and the infrared waves closer to the human spectrum. This is what I like to photograph foliage in, because it can be false colored and remapped using the color channels that your camera knows how to deliver data in. I have a Nikon D80 that has been modified to shoot in this wavelength, with an upper infrared spectrum bound (unmeasured) limited only by the physical limitations of a silicon CCD.

For some reason, for the duration of this experiment, this setup absolutely refused to focus properly. This camera does not have live-view, and it is very difficult to assess these images until they’ve been processed on a computer. Nonetheless, these blurry images were interesting…

Notice there are no freckles, and her shirt appears grey.

Short Wave Infrared

I don’t think anything “sees” solely in this spectrum, though it is a difficult concept to explore, so perhaps time will tell. To capture the full breadth of short wave infrared, or to move into longer wave infrared, we would have to use a special sensor made out of indium gallium arsenide. It’s interesting to see that any sun damage and melanin deposits, such as freckles and tans, will not appear in this wavelength. The IR photons do a good deal more sub-surface scattering in the skin than visible light, rendering each person as a waxen figure.

Notice there are no freckles, her shirt now appears white, her eyes have darkened considerably, and all of her skin appears waxen and soft.

Polishing these photos up a bit in a vintage style split-toning

You might have noticed these are not delivered in monochromatic black and white, as in the style of a silver gelatin process. Instead, I chose to render them out with a bit of split-toning. Since the birth of photography, this was a common practice, using various methods to bring color to the highlights and to the shadows.

In this case, I made the highlights gold to mimic the flame-gilding of the mid 1800s, which “fixed” the highlights of a photograph using a gold chloride solution and an open flame. As ambrotypes caught on in the 1860s, “ruby” hued backs became more common in photography- so I enjoy using a red tint in my lowlights.

A Horrible, No-Good Problem Arises in the bayer filter!

The images I captured occasionally showed very odd artifacts. Fine hairs would get lost, and appear in a fashion that almost looked like… a shadow? But why would such a thing happen?

Limited orthochromatic spectrum photo (right) renders the hair as a weird blobby shadow. Why?

Then I realized we’ve surpassed the intention of a digital sensor, and we’re paying the price.

All digital sensors are actually monochromatic- black and white. In order to capture color, a translucent, microscopic grid of red, green, and blue tiles is spread across the sensor, with one tile to each pixel. This is a “Color Filter Array”, or CFA. Conventionally, this grid is laid out in a “Bayer pattern”, named for Bryce Bayer of Eastman Kodak, who conceived of this. This Red-Green-Green-Blue pattern mimics the human eye’s sensitivity (a range where two of our three cones’ sensitivities overlap), allowing for more green to be considered by the camera. Interesting fact, this was supposed to be Cyan-Magenta-Yellow, to better subtract out the wavelengths necessary, but the technology simply did not exist at the time of inception to make such a CFA.

The sensor still functionally reports the incoming light as black-and-white, but now the camera, or camera raw processor if you move it to a computer, performs a process called “demosaicing”, or “debayering”. This reconstructs the image in four pixel groups, using a variety of different methods, depending on the camera and the software, finally outputting RGB channels in full resolution.

This means every digital photograph you have ever taken has functionally been reconstructed from quarter-resolution samples! Obviously, we haven’t noticed it, so we don’t really mind, but when we’re missing an entire wavelength (red), and sharply attenuating another (green), we’re only effectively using one out of every four pixels.

Note only 1 in 4 pixels is appropriately captured.

Using RAWTherapee, the discovery of which was a definite win in this project, I could explore my sensor data before it was demosaiced and processed into the image I would see on a screen. As you can see above, it was disheartening.

What is a demosaic process supposed to use to rebuild the image? Without the appropriate data, it simply uses blank black pixels to try and interpolate data. This is going to get weird. Without writing your own demosaic algorithm, and accompanying debayering software to run it, there is no fix for this. (Update: I fixed it by writing my own demosaic algorithm and accompanying debayering software.)

Images captured in the “digital collodion” spectrum on a color digital camera will always get a little muddled when opened by your camera or photo editing software because of this simple fact. The luminance will be interpreted as ~60% less than it is, artificially flattening an image. Details will be lost, and reconstructed as goo.

Immediately after posting this I thought to look at the spectral response of each of the bayer tiles. It turns out the blue tile perfectly matches the orthochromatic technique, so I can effectively write an algorithm to simply crop the other 3 pixels, reducing this to a highly accurate 1/4 resolution. Updates to follow.

Update: Fixing the Debayer Problem!

After writing a surprisingly simple script in Matlab, which did an embarrassingly simple downsample to throw out the three pixels that were not relevant to the spectrum we captured this in, we have a solution. I cannot stress enough, we got lucky. This almost teaches bad color science- you typically cannot select a wavelength in post. We just got very, very, comically lucky and found that my Nikon D610 uses the same spectral sensitivities in the blue bayer tile as collodion once did. That is an odd coincidence, to say the least. This is worth researching more on later.

I will later rewrite this script for free interpreters, so that anyone can follow in my footsteps without spending money on expensive coding platforms. A full new article will be posted exploring this. Update: You can find the code, run on freeware, to perform this in my next post.

The Results are Stunning

The differences far surpassed what I expected. Pleasantly, the math suddenly matched up to what my light meter had suggested in my camera. Now, without any exposure adjustments, the photograph matches the levels of the full-spectrum photo- remember, I had already taken exposure compensation into consideration, allowing an extra 2/3s of a stop of light, when I took the photo.

There’s no bringing back the fine details- those little wisps of hair are lost to the ages, but they didn’t become a gooey mess. So much detail has been restored to the photo, I am absolutely stunned. I did not expect this level of improvement. I went back and reuploaded this spectrum in the earlier section with Kayla.

One more comparison:

The difference and detail recovered is nothing short of stunning.

Perhaps my short-wave infrared photos could benefit from this process as well?

All of the Photo Results

All results delivered as near to from-camera as possible, using small exposure adjustments to match skin tone. When gradient adjustments were used, they were used uniformly across all photos of the model, to keep lighting consistent between wavelengths.

Kaitlin

Kevin

Christine

Xach

Caitlin

Kayla

Next Steps to Take it Further

In order to fully and accurately capture the collodion sensitivity range, I need to not only use a lens that allows ultraviolet photons to pass, as used in this experiment, but a camera with a sensor modified to receive UV light. Because this is not a fast or cheap prospect, I’ve been dragging my feet to see if this could be done in conjunction with another modification…

As photographing humans draws no benefit from presenting false color, hyperspectral photography (such as UV) is best delivered in black and white. Due to the problems with the bayer pattern described above, having a color sensor actually hurts collodion-spectrum portraiture- if the construction of the image relies on a missing red channel, then we need to have a monochromatic sensor to gather an image using the filters described in this process with due sharpness. So we have two options (Update, a third option has arisen): Buy a monochromatic camera, which can run between 3,000 and 50,000 dollars, or do a “mono-mod” to scrape the bayer filter off of an existing camera. Conversion shops have assured me this is impossible on the Nikon cameras that I like to use, but I hold out hope for a “full spectrum” monochromatic modification. Alternatively, now that a custom debayer option exists that quarters the resolution, I can look into intelligent upscaling, possibly using AI to regain resolution lost in the process.

Final Thoughts

- As I used attractive models in this experiment, instead of the lab tools one should use to characterize optics, the spectral range of each photograph is an educated guess at best, based on theoretical readings. Lab characterizations will follow.

- Trust the math. I know things look underexposed and incorrect, but if you’ve set your exposure compensation based off an 18% gray card- or, realistically, ignored that and just set it to +.7, then you can trust your lightmeter shooting in “orthochromatic blue”.

- Don’t trust the Bayer filter. It’s bad and should feel bad. Consider this in any hyperspectral pursuit, and any pursuit where you are limiting your input wavelengths via optical filter.

- Sometimes, there’s no substitute for the real thing- recreating wet-plate collodion and tintypes is severely limited by digital consideration- but limitation is the mother of invention!

02

2018

In short: Astrophotography relies on trying to let the most light into your camera before the earth rotates too much and the celestial bodies begin to leave streaks- which is typically under 30 seconds, depending on your lens.

Finding a Dark Sky

Mastery of your camera makes absolutely no difference if you aren’t shooting in an environment with very little light pollution.

Shoot Without the Moon

Ideally, you shoot between sunset and moonrise.

Don’t Forget the Weather

Living in California as I do, it becomes very easy to forget that weather exists. The skies are generally clear at night here, but when travelling this isn’t the case. Additionally, wind storms in the desert (the darker spots of California) kick up quite a bit of dust, which can become a cloying haze in even simple astrophotography.

Avoid Light Pollution

This is simple, check darkskyfinder.com and find a place that is very, very dark. Interestingly, these line up with ghost towns with some frequency.

Your Camera Settings

To better understand astrophotography, you need to assess the ideal settings, and then understand why those simply won’t work. Then we can dial in actual, pragmatic settings that will work.

The Ideal Settings

F-Stop

You want those stars to be sharp as possible, right? And your lens likely has the best ratio of sharpness to focal depth at f7.

Shutter speed

You want to let in as much light as possible, and with such a low ISO, you probably want to be

ISO

You want as little noise as possible, because in post, trying to differentiate between faint stars and noise may become difficult. Minimize noise by setting the ISO as low as possible, and the lowest possible is ISO100.

Stop Dreaming

Try these settings, and see what you get. Blackness, with no stars. Clearly these settings don’t quite work, but we can reasonably assume that the two most important settings are the shutter speed and ISO.

Actual settings that work

F-Stop

Open up that aperture as much as possible. You are desperate for every bit of light, so open it up as far as the lens will allow- f2.8 or lower, if you can.

Shutter Speed

Here you’ll need to use something called the “500 Rule”. Simply put, determine your maximum shutter speed time by dividing 500 by your lens’ focal length. I like to shoot on as wide a lens as possible for this reason, and with my Rokinon 14mm, I can shoot at (500/14), or 35 seconds. I cannot recommend the Rokinon 14mm enough for astrophotography.
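The 500 Rule is one line of arithmetic, so here it is as a minimal sketch. The crop_factor parameter is my own assumption: the rule is usually quoted for full-frame-equivalent focal lengths, so it defaults to 1.0 and you can ignore it on a full-frame body.

```python
def max_shutter_seconds(focal_length_mm, crop_factor=1.0, rule=500):
    """Longest exposure, in seconds, before stars start to trail.

    crop_factor is an assumed adjustment for smaller sensors
    (1.0 means full frame); rule is the constant being divided.
    """
    return rule / (focal_length_mm * crop_factor)

wide = max_shutter_seconds(14)    # the 14mm from the text, about 35.7 s
normal = max_shutter_seconds(50)  # a 50mm lens only gets 10 s
```

This is why wide lenses are so forgiving here: the 14mm buys you more than three times the exposure of a 50mm.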

ISO

You very likely need to set this near your camera’s max, try around ISO3200. The lower the better, obviously, but

Try to hit an Exposure Value (EV) of -7

The exposure value is a universal number for how bright something is. There’s a formula to calculate it. You don’t need to know it. You can use a calculator, and with a more in-depth explanation of astrophotography!
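If you are curious anyway, here is a quick sketch that plugs the working settings from this post (f/2.8, 35 seconds, ISO3200) into the usual exposure value relationship, EV = log2(100 × F² / (S × t)). The function name is just for illustration.

```python
import math

def exposure_value(fnumber, shutter_speed, iso):
    # EV = log2(100 * F^2 / (S * t)), with t in seconds
    return math.log2((100 * fnumber ** 2) / (iso * shutter_speed))

astro_ev = exposure_value(fnumber=2.8, shutter_speed=35, iso=3200)
print(round(astro_ev, 2))  # -7.16
```

So those three settings together land within a fraction of a stop of the -7 target.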

Consider your foreground

You might find that shooting the dizzying expanse of the milky way gets old quickly- without capturing the environment you are experiencing it in, often these shots lack impact.

Consider tree silhouettes, models on rock outcroppings, or interesting structures.

Think about painting the environment with flashlights, or sprinting around firing your flashes during the long exposures.

05

2017

If you want to skip the boring stuff: IR photography is real complicated and involves breaking every conventional way you would use a camera. Here’s how to do it:

Infrared (IR) photography is kind of the opposite of photography. It’s important to know that both in form and in function, IR photography breaks all the rules of photography. Instead of capturing the world as you see and experience it, and how others might see and experience it, you are creating an image using wavelengths the human eye could never see. You aren’t forging a connection with the world- you’re breaking one.

As such, know that your camera, regardless of what it is, is not designed to capture, record, or process infrared. Absolutely every step of the process is either breaking or circumventing the way image technology is supposed to work.

What is Infrared?

Light, being a form of electromagnetic radiation, has a wavelength. That wavelength dictates what color we perceive the light to be, largely due to how those photons (which, let’s not get into it, are particles that are also waves) tickle the cones in our eyes. We have three types of cones, and if you plot out the tristimulus model for light perception, you see that the three different cones allow us to visualize color fairly evenly-ish between 390 and 700 nanometers. But, of course, waves can exist well outside this boundary. The shorter wavelengths, below 390nm, are even shorter than violet, and are commonly called “ultraviolet” (UV). The longer wavelengths, above 700nm-ish, are longer than red, and are called “infrared”. Our eyes can’t see these, but an electronic sensor theoretically can! In fact, through a process called “hyperspectral imaging”, you can capture whichever wavelengths you want- with a sensor designed appropriately!

Infrared gets subdivided into Near Infrared (NIR), Shortwave Infrared (SWIR), Medium-wave Infrared (MWIR), and Longwave Infrared (LWIR), some acronyms you might see on more pedantic websites.
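To make those boundaries concrete, here is a tiny Python sketch that sorts a wavelength into ultraviolet, visible, or infrared using the rough 390nm and 700nm cutoffs from above. The names are hypothetical, the cutoffs are fuzzy in real life, and I have deliberately not split infrared into NIR/SWIR/MWIR/LWIR because sources disagree on where those lines fall.

```python
# Approximate edges of human vision, as quoted in the text.
VISIBLE_MIN_NM = 390
VISIBLE_MAX_NM = 700


def classify_wavelength(nanometers):
    """Return 'ultraviolet', 'visible', or 'infrared' for a wavelength in nm."""
    if nanometers <= 0:
        raise ValueError("wavelength must be positive")
    if nanometers < VISIBLE_MIN_NM:
        return "ultraviolet"
    if nanometers <= VISIBLE_MAX_NM:
        return "visible"
    return "infrared"


for nm in (360, 550, 720):
    print(nm, "nm is", classify_wavelength(nm))
```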

Of course, any image you capture in non-visible light needs to be re-mapped, or “false colored”, so that you can present the image in the visible spectrum. The wider the range of wavelengths you have captured, the more you have available to remap.
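One common way to false color an IR image is a red/blue channel swap. The sketch below shows the idea in plain Python on a made-up 2x2 "image" of (r, g, b) tuples. The function name and pixel values are purely illustrative, and a real workflow would do this in an image editor or with an imaging library rather than nested lists.

```python
def swap_red_blue(image):
    """Return a copy of the image with the red and blue channels exchanged.

    image is a list of rows, and each row is a list of (r, g, b) tuples.
    """
    return [[(b, g, r) for (r, g, b) in row] for row in image]


# Hypothetical pixel values, standing in for a reddish straight-out-of-camera IR frame.
ir_frame = [
    [(200, 40, 10), (180, 60, 30)],
    [(90, 20, 5), (250, 240, 230)],
]

swapped = swap_red_blue(ir_frame)
print(swapped[0][0])  # the strong red value now sits in the blue channel
```

The swap is its own inverse, so running it twice gets you back to the original frame.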

Why Can’t My Camera Shoot Infrared?

The sensor on your camera can fudge fairly far on either side of the visible spectrum. Obviously, it did not behoove any camera sensor manufacturers to tune a sensor to capture wavelengths outside of what we’d be able to see, so instead they created sensors that roughly hit about where our eyes would see. Roughly. There’s some wiggle room, quite a bit of wiggle room, on both sides of the visible spectrum.

But it would do your capture process no good if you had all these spare photons bouncing about, especially as those photons are needlessly imparting a good deal of heat energy. As such, the camera manufacturers place a dichroic filter, or “hot mirror”, in front of the sensor that bounces that useless IR light right back out of the lens. You’ll often see this called an “Optical Low-Pass Filter”, or OLPF. (Strictly speaking, that name belongs to the anti-aliasing layer, but the two usually live in the same stack and the terms get used interchangeably, so I’ll stick with OLPF here.) That anti-aliasing layer is a nice piece of glass, well, quartz, actually, sandwiched into the stack, which creates a slight blur that a normal photographer would find necessary to keep from generating slightly bizarre artifacts in fine detail. Basically, you’ve got an optical wafer in front of your sensor that keeps your camera from recording wavelengths beyond human vision, and this wafer can take different forms with different sensitivities depending on the camera. Sometimes they don’t work so perfectly, which allows us to perform some trickery to capture IR on an unmodified camera!

Forcing a Camera to Shoot Infrared!

Without modifying the camera

Depending on your camera, the imperfect OLPF might let in enough photons to capture IR, if you set your exposure long enough! In fact, some cameras don’t even bother blocking IR until it passes 750nm, though most begin to cut around 700.

What this means is, if you purchase an IR filter above 700nm, such as the cheap and easily-available R72, which will prevent any photons UNDER 720nm from passing, you can simply set your exposure to a much longer period, and let those photons that sneak past the interior OLPF just trickle in. You could call these filters optical high-pass, longpass, or infrared bandpass, and in my experience they seem to be far more effective than the optical lowpass filter inside your camera, which is why the long exposure trick works.

This has a few problems

- You won’t get much of a color range to false color later. Wavelengths under 720nm disappear, obviously, with an R72. You can’t really use a shorter wavelength filter, because your camera is going to be far more sensitive to the 500-700nm wavelengths that aren’t being cut by the OLPF. That means if you used, say, a 560nm filter, you’d get a crapton of “red” photons before you ever got an adequate amount of IR photons.

- You can’t autofocus with the filter on.

- You can’t really see through your camera at all. Because the IR filter blocks visible light, if you look through the lens you’ll simply see black.

- You’re going to have to find the proper exposure through trial and error.

- Because you are functionally shooting long-exposure, even if only a quarter of a second, you are going to experience blur on anything moving, and will need a tripod to combat camera shake.

The actual steps to shooting with an unmodified camera and a highpass lens filter are simple

- Compose your shot on a tripod and set focus.

- Affix a 720nm high-pass lens filter.

- Make up some wackadoo exposure (I’m sure there is a multiplier for your normal exposure, but I haven’t figured this out yet). I found ¼ second at ISO320 and f2 to work well.

- Shoot, ideally with a cable release to prevent camera shake

- “Chimp” and see what the camera actually recorded. You should see a deep red image with black blacks and near-white highlights. If you don’t, adjust your exposure and try again until it works.

- Go on to the steps to channel swap and process the photo. (post inbound, stay-tuned!)
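If you want to hunt for your own exposure multiplier, one approach is to note what your meter suggests without the filter, note the exposure that finally works with the filter on, and count the stops between them. Here is a minimal Python sketch of that arithmetic. The function names are mine, and the "metered" settings are a hypothetical placeholder rather than a measurement, so swap in your own reading.

```python
import math


def exposure_stops(f_number, shutter_seconds, iso):
    """Relative brightness of the recorded image, in stops (higher is brighter)."""
    return math.log2(shutter_seconds * iso / f_number ** 2)


def stops_between(metered, working):
    """How many extra stops the working exposure gives over the metered one.

    Both arguments are (f_number, shutter_seconds, iso) tuples.
    """
    return exposure_stops(*working) - exposure_stops(*metered)


metered = (8, 1 / 500, 100)  # HYPOTHETICAL meter reading without the filter
working = (2, 1 / 4, 320)    # the ¼ second, ISO320, f2 exposure from the steps above

extra_stops = stops_between(metered, working)
print(f"about {extra_stops:.1f} extra stops, a {2 ** extra_stops:.0f}x multiplier")
```

The number will vary with your camera's internal filter and the light you are shooting in, so treat anything you work out as a starting guess, not a constant.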

Modifying the Camera to Properly Shoot Infrared

If you want to remap the infrared spectrum between 560 and 1000nm, you are going to have to modify your camera and tear out the optical lowpass filter deep inside its guts.

I do not recommend attempting to modify your camera yourself. I work in a lab where we perform research and development on image capture devices, so I, in theory, have more resources than the normal human to perform such a conversion, yet I deemed it too risky to perform myself. I seldom deem anything too risky to perform myself. But this isn’t a simple tooth extraction or appendectomy.

If you accidentally discharge a capacitor in your camera while tinkering, you could die. Literally, no-foolin’, no takesies-backsies, insta-death. But, laughing in the face of death as we photographers so often do, let’s move on to an even bigger risk.

Your camera might never achieve focus again.

Really, if you give anything a good knock, shock, or sneeze at the wrong time, your camera is not only not going to shoot IR, it’s never going to shoot anything ever again. It will cease to be a camera, and become a conversation piece about what could have been.

Moreover, the replacement glass you need to take the place of the OLPF inside your camera is expensive and difficult to find- you’re going to need to order it from a company that will do these conversions for you anyway.

Here’s ultimately what needs to happen: You need to get that OLPF out of your camera, and have it replaced by some piece of glass that can allow infrared through. That glass needs to have a similar, perhaps identical, thickness to the OLPF that has been removed, or else you’ll need to recalibrate focus, which I do not know how to do at the scale we are talking about here. Any conversion service will handle that for you.

You can replace the OLPF with a few things.

- Clear, no-filtering-whatsoever glass. Your camera now captures all of the light spectrum, forever. This seems the most attractive option at first glance, because you can now shoot UV, NIR, IR, or any crazy thing you can dream of, so long as you have the correct filter placed over your lens, which you can easily change. It comes with some downsides, though:

  - UV lens filters are so expensive that it’s doubtful you’ll want to drop an additional $600 on a filter, after having paid for this conversion (and I’m not certain the OLPF got in the way of using this anyway).

  - Like shooting with a pre-conversion camera with a lens filter, you’ll be unable to look through the viewfinder.

  - Like shooting with a pre-conversion camera with a lens filter, you’ll be unable to use autofocus.

  - You will require a bandpass filter for every lens you want to use.

- Ultralowpass filter – Pass ~214nm to ~360nm, block everything else. This range is ultraviolet, and nothing else. I’m including this in the tutorial because it’s the same philosophy, but this glass is hard to find and prohibitively expensive. I have no experience with this, nor have I ever met anyone who does, so I am speaking hypothetically.

- IR bandpass filters- Using these will negate a need for a filter over your lens, and you won’t need a filter for every lens you own.

The two main companies seem to be Kolari Vision and LifePixel. I myself sent away to have my Nikon D80 converted by Kolari.

The actual steps to shooting with a camera modified with an internal IR bandpass filter are even simpler, but still have some caveats

Set an exposure compensation. This may differ based on your camera, but I use around -0.7, and from there I can use my light meter as if it were completely normal.

Keep your ISO at 100. Always. Seriously. The slightest bit of noise is going to destroy this.

Shoot with prime lenses, and don’t trust the focus through the lens!

- Every wavelength of light bends by a slightly different amount when it passes through glass, because the index of refraction, or IOR, changes with wavelength. Anyone outside the field of optical engineering probably won’t need to think about this, but it is why we see rainbows when white light hits a prism. Your camera lenses are designed with this in mind, and specially tuned so it doesn’t cause problems. They are NOT, however, designed with infrared in mind. This is speculation on my part, but I have found, with much frustration, that my focus is sorely impaired with all of my zoom lenses. I have a 12-24 DX lens that somehow has an incredibly shallow depth of field, even completely stopped down, when used on my IR camera. This might vary from lens to lens, but you will likely find that you need to shoot with prime lenses in order to avoid missing focus. Break out that 50mm, because, as usual, it’s going to be your workhorse lens here.

So here are my final notes on IR photography.

- Noise becomes very evident after a channel swap, so try and shoot everything at ISO100.

- A wider range of wavelengths means more mappable colors

- Anything above 720nm will likely need to be presented in grayscale.

- 720nm will give you slightly pink-or-gold leaves and blue everything else

- 560nm will give you golds, pinks, and blues with the proper post-processing, but you are including half the visible spectrum at this point, so you might lose some of your science-y bragging rights.

- Shooting on an unmodified camera has underwhelming results

- Shooting with an external high-pass filter is not very fun, as you can’t even look through the viewfinder.

- If your camera is modified, you can use an external high-pass filter in “live view”.

- Shooting on a modified camera with an optical high-pass filter directly over the sensor allows you to focus, look through the lens, and shoot normally.